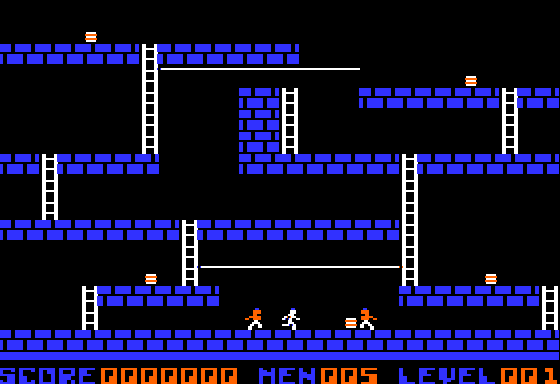

Ever since I got my first computer (yup, you know the one), I've been fascinated and intrigued by all those cool game sound effects. The Apple II has no dedicated sound chip; according to an article I recently came across ("Making the Apple II sing"), it's essentially just a 1-bit square wave sound generator!


So what's the secret behind the sound effects in games such as Lode Runner, Gumball, Choplifter and Ms. Pac-Man (to name just a few)?

Given how simple the sound hardware is, I gather it has more to do with the sequencing (i.e. note pitch, note length, tempo and the placement of notes relative to each other). Recording those sound effects and time-stretching them to slow them down helps, but many of them are played so quickly, with such short notes, that it's hard to make out by ear how they are constructed.

Perhaps someone can shed some light on the matter?

The short answer is that it's not just square waves. A plain square wave produces only a single pitch (its fundamental plus odd harmonics).

The key to more sophisticated audio effects is pulse-width modulation. It can create much more intricate waveforms, especially after the natural filtering of the Apple's speaker circuit. Depending on how complex the software is, you can achieve multi-voice harmonies and acceptable speech synthesis with just a 1-bit toggling speaker circuit!

Check this out.
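To see why pulse-width modulation gets you more than one pitch, here's a minimal Python sketch (a generic illustration, not code from any Apple II program): each desired output level becomes a fixed-length frame of 1-bit speaker states whose "on" time is proportional to that level, and a one-pole low-pass filter stands in for the smoothing done by the speaker and its circuit. The frame length and filter coefficient are arbitrary assumptions.

```python
import math

def pwm_encode(levels, frame_len=32):
    """Encode levels in [0.0, 1.0] as a 1-bit stream: each level becomes one
    frame of frame_len bits whose first round(level * frame_len) bits are 1."""
    bits = []
    for level in levels:
        on = round(max(0.0, min(1.0, level)) * frame_len)
        bits.extend([1] * on + [0] * (frame_len - on))
    return bits

def low_pass(bits, alpha=0.05):
    """One-pole IIR low-pass filter, a crude stand-in (assumption) for the
    smoothing done by the speaker cone and its drive circuit."""
    out, y = [], 0.0
    for b in bits:
        y += alpha * (b - y)
        out.append(y)
    return out

def frame_averages(bits, frame_len=32):
    """Mean of each PWM frame: the level an ideal slow filter would settle to."""
    return [sum(bits[i:i + frame_len]) / frame_len
            for i in range(0, len(bits), frame_len)]

if __name__ == "__main__":
    frame = 32
    # One cycle of a sine wave, shifted into the range [0, 1].
    target = [0.5 + 0.5 * math.sin(2 * math.pi * n / 16) for n in range(16)]
    stream = pwm_encode(target, frame)
    filtered = low_pass(stream)
    for n, (want, got) in enumerate(zip(target, frame_averages(stream, frame))):
        # Filter output sampled at the end of each frame; some ripple remains.
        print(f"target {want:.3f}  frame mean {got:.3f}  "
              f"filtered {filtered[(n + 1) * frame - 1]:.3f}")
```

The speaker only ever sees 0s and 1s, but once the stream is smoothed, the average level follows the sine, which is the sense in which a 1-bit output can carry arbitrary waveforms.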

You've got $C030 accesses (each one toggles the speaker) and their timing in microseconds: machine language and cycle counting, all to fiddle with the timing between the speaker's clicks.

Check this out.
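As a rough worked example of that cycle counting: a plain tone needs one $C030 access per half-period, so the number of CPU cycles between accesses is the clock rate divided by twice the frequency. The sketch below assumes a nominal 1.023 MHz clock (the real effective rate is slightly lower and varies by model) and a hypothetical delay loop of 5 cycles per iteration (e.g. a DEX/BNE pair: 2 cycles plus 3 when the branch is taken) with 12 cycles of fixed overhead; the overhead figure is invented for illustration.

```python
CPU_HZ = 1_023_000  # nominal Apple II 6502 clock; approximate (assumption)

def half_period_cycles(freq_hz, cpu_hz=CPU_HZ):
    """CPU cycles between two $C030 accesses for a square wave at freq_hz."""
    return cpu_hz / (2 * freq_hz)

def loop_count(freq_hz, cycles_per_iter=5, overhead=12, cpu_hz=CPU_HZ):
    """Iterations of a hypothetical delay loop (cycles_per_iter cycles each,
    plus `overhead` cycles for the $C030 access and loop setup) that best
    match the wanted half-period."""
    n = round((half_period_cycles(freq_hz, cpu_hz) - overhead) / cycles_per_iter)
    return max(n, 0)

def actual_freq(n, cycles_per_iter=5, overhead=12, cpu_hz=CPU_HZ):
    """Pitch actually produced when each half-period is n loop iterations long."""
    return cpu_hz / (2 * (overhead + n * cycles_per_iter))

if __name__ == "__main__":
    for name, f in [("A4", 440.0), ("C5", 523.25), ("A5", 880.0)]:
        n = loop_count(f)
        print(f"{name}: want {f:7.2f} Hz, {half_period_cycles(f):7.1f} cycles/half-period, "
              f"loop count {n}, get {actual_freq(n):7.2f} Hz")
```

The gap between the wanted and produced pitch comes from the loop's 5-cycle granularity. Lower notes push the count past 255, which an 8-bit counter can't hold, so a real routine would need a nested or slower loop there.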

"1-bit" audio generation is not the limitation it seems. The highest-fidelity systems, such as DSD (Direct Stream Digital), use 1-bit sampling at multi-MHz rates.

More modestly, there are demos on home computers that play back 4-channel sampled tracks through the "1-bit" speaker channel.

And there are oddball art projects that create some quite interesting music by toggling a single pin on a microcontroller.
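The DSD point can be illustrated with a first-order delta-sigma modulator: each output bit is chosen so that the running error between the wanted signal and the 1-bit output stays small, pushing quantization noise up to high frequencies where a filter removes it. This is a generic textbook sketch in Python, not code from any Apple II demo; the moving-average decoder is a deliberately crude stand-in for a proper reconstruction filter.

```python
import math

def delta_sigma(samples):
    """First-order delta-sigma modulator.
    samples: floats in [-1.0, 1.0]; returns one +1/-1 output bit per sample."""
    integrator = 0.0
    prev_out = 0.0
    bits = []
    for x in samples:
        integrator += x - prev_out          # accumulate input-minus-output error
        out = 1.0 if integrator >= 0 else -1.0
        bits.append(int(out))
        prev_out = out
    return bits

def moving_average(bits, width=64):
    """Crude reconstruction filter: mean of the most recent `width` bits."""
    out, acc = [], 0
    for i, b in enumerate(bits):
        acc += b
        if i >= width:
            acc -= bits[i - width]
        out.append(acc / min(i + 1, width))
    return out

if __name__ == "__main__":
    # A slow half-scale sine (1024 samples per cycle), i.e. heavy oversampling.
    signal = [0.5 * math.sin(2 * math.pi * n / 1024) for n in range(4096)]
    decoded = moving_average(delta_sigma(signal))
    lag = 32  # a 64-tap moving average delays the signal by about half its width
    for n in range(1024, 2048, 128):
        print(f"n={n}  input {signal[n - lag]:+.3f}  decoded {decoded[n]:+.3f}")
```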

Back in the '80s, whenever I beat a game, I'd reverse-engineer it as a second challenge. That's how I learned how two of your listed examples worked: by deciphering their machine code...

Thanks for the link to Paul Lutus' Electric Duet. It brought back some great memories and reacquainted me with Paul's great contributions.

So basically pulse-width modulation allows for more waveforms, which certainly changes the game. I'm sure there's more to it than that, but that takes care of the audio source (i.e. the "instrument sound").

One thing I've done is time-stretch some game sound effects (i.e. slow them down while keeping the pitch) using the time-stretch feature in Apple's Logic Pro X on a Mac. Here are a few examples (audio recorded from an Apple ][+ speaker while playing various games):

Lode Runner 1

LodeRunner_SFX1.mp3

LodeRunner_SFX1_time-stretched.mp3

Lode Runner 2

LodeRunner_SFX2.mp3

LodeRunner_SFX2_time-stretched.mp3

Lode Runner 5

LodeRunner_SFX5.mp3

LodeRunner_SFX5_time-stretched.mp3

Gumball 1

Gumball 1.mp3

Gumball 1_time-stretched.mp3

Gumball 2

Gumball 2.mp3

Gumball 2_time-stretched.mp3

Gumball 3

Gumball 3.mp3

Gumball 3_time-stretched.mp3

Gumball 6

Gumball 6.mp3

Gumball 6_time-stretched.mp3

Ms. Pac-Man 1

Ms. Pac-Man 1.mp3

Ms. Pac-Man 1_time-stretched.mp3

Ms. Pac-Man 2

Ms. Pac-Man 2.mp3

Ms. Pac-Man 2_time-stretched.mp3

Ms. Pac-Man 3

Ms. Pac-Man 3.mp3

Ms. Pac-Man 3_time-stretched.mp3

Ms. Pac-Man 4

Ms. Pac-Man 4.mp3

Ms. Pac-Man 4_time-stretched.mp3

Ms. Pac-Man 5

Ms. Pac-Man 5.mp3

Ms. Pac-Man 5_time-stretched.mp3

Ms. Pac-Man 6b

Ms. Pac-Man 6b.mp3

Ms. Pac-Man 6b_time-stretched.mp3


Wow! That's pretty cool.

Did you manage to extract the sequences ("melodies") from those games?

Whether that data ended up in a MIDI file or had to be entered by hand (note, duration, next note or rest, duration, etc.), it would be very useful for learning how they were constructed.
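If pitch and duration values were pulled out of a routine, turning them into note names is simple arithmetic: a frequency maps to the nearest MIDI note number via 69 + 12·log2(f / 440). The Python sketch below assumes the extracted data is a list of (half-period in CPU cycles, duration in ms) pairs with 0 meaning a rest, and a nominal 1.023 MHz clock. Both the data layout and the clock figure are assumptions, since each game stores its tunes in its own format, and the tune in the example is made up.

```python
import math

CPU_HZ = 1_023_000  # nominal Apple II clock; approximate (assumption)
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def cycles_to_freq(half_period_cycles, cpu_hz=CPU_HZ):
    """Square-wave frequency for a given number of CPU cycles per half-period."""
    return cpu_hz / (2 * half_period_cycles)

def freq_to_midi(freq_hz):
    """Nearest MIDI note number (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))

def midi_name(note):
    """Note name with octave, using the convention that MIDI note 60 is C4."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"

def decode(sequence):
    """sequence: hypothetical (half_period_cycles, duration_ms) pairs pulled
    from a game's music table; half_period_cycles == 0 marks a rest."""
    notes = []
    for half_period, ms in sequence:
        if half_period == 0:
            notes.append(("rest", ms))
        else:
            midi = freq_to_midi(cycles_to_freq(half_period))
            notes.append((midi_name(midi), ms))
    return notes

if __name__ == "__main__":
    tune = [(1955, 120), (1162, 120), (0, 60), (977, 240)]  # made-up data
    for name, ms in decode(tune):
        print(f"{name:>4} {ms} ms")
```

From a list like that, writing a MIDI file or entering the notes into a sequencer is mostly bookkeeping.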

No, I didn't think of extracting the melodies. I enjoyed making it replay its own songs, but I only disassembled the subroutines that actually played the musical notes out of the speaker. And I "borrowed" their ideas to make my own music and sound effect routines.

This YouTube clip from Moon Patrol illustrates several aspects of Apple sound fx. The background melody is played in tiny staccato pulses. As the buggy passes checkpoint Y, the program has to do more animation work, so the pulses of music become audibly sparser (and slower). Then when the buggy reaches checkpoint Z, all animation stops in order to play a lively duet using the sound routine explained below.

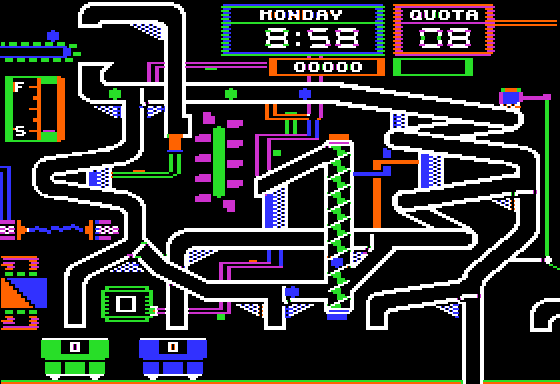

Around 1984 I disassembled the two-voice music subroutine Atarisoft had used in Moon Patrol; it was the same subroutine Ms. Pac-Man used in your "Ms. Pac-Man 1" sample. Here's an illustration of how its algorithm works, as far as I remember...

Suppose Atarisoft's "duet" music routine was trying to play two musical pitches illustrated by the green square wave and the purple square wave. The duet routine doesn't just blindly pulse the speaker for both square waves because it would create a chaotic jumble of extra edges, sounding nothing like either melody. Instead, the routine pays attention to whether each edge is "rising" or "falling". For instance, the first time the green wave rises, the routine pulses the speaker to make it rise too. But then when the purple edge rises, the routine does not pulse the speaker because it has already generated a rising edge and doesn't need another rising edge.

Square waves.png
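Here's a minimal Python sketch of the edge-tracking idea as described above. It is a reconstruction from that description, not Atarisoft's actual code. Two square-wave voices run in parallel, and the speaker is toggled only when an edge from either voice heads toward a level the speaker isn't already at. Time is counted in abstract ticks, the half-periods are arbitrary, and the rule for opposite edges landing on the same tick (the later voice wins) is my own assumption.

```python
def square(t, half_period):
    """Level (0 or 1) of a square wave that starts low and first rises at t = half_period."""
    return (t // half_period) % 2

def duet(half_a, half_b, ticks):
    """Edge-aware mix of two square-wave voices onto one 1-bit speaker.

    Returns (speaker level at each tick, ticks at which the speaker is toggled,
    i.e. where a real routine would access $C030). If the voices have opposite
    edges on the same tick, both toggles are recorded and the later voice wins."""
    speaker = 0
    levels, toggles = [speaker], []
    for t in range(1, ticks):
        for half in (half_a, half_b):
            now, before = square(t, half), square(t - 1, half)
            # This voice has an edge. Follow it only if the speaker is not
            # already at the level the edge is heading to; otherwise drop it.
            if now != before and now != speaker:
                speaker = now
                toggles.append(t)
        levels.append(speaker)
    return levels, toggles

if __name__ == "__main__":
    # Two slightly different pitches (half-periods of 20 and 23 ticks).
    levels, toggles = duet(20, 23, 400)
    print("speaker toggles at ticks:", toggles[:20])
    print("edges in both voices:", 399 // 20 + 399 // 23, " toggles used:", len(toggles))
```

The key design choice is that a redundant edge is simply skipped rather than producing an extra click, so the speaker output never contains the "chaotic jumble" a naive mix would create. With identical half-periods the output collapses to a single square wave.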

This would then create the illusion of a dual-oscillator synthesizer: two separate oscillators, slightly detuned from each other and played at the same time, resulting in a "fat", "big" sound.

Is it safe to assume that the Apple II (with its original hardware) could only play one channel of sound at a time (i.e. like a monophonic synthesizer), hence the need for tricks like this to make it sound bigger and to have different melodies or sounds play at once?